Sep 27

/

Benjamin Schumann

Do you test your model? Improve it with this one simple function

YOU ARE TESTING YOUR MODEL, RIGHT? GREAT! LET ME SHOW YOU HOW TO INCREASE YOUR TESTING EFFICIENCY, MAKE YOUR MODEL CODE LOOK ELEGANT AND EVEN ENABLE YOUR CLIENTS TO TAKE ADVANTAGE OF YOUR TESTING.

Congratulations, you just added a really cool new feature to your simulation model. Well done, your client/boss will love it. Of course, you are excited to test if it actually works. How do you actually do that? Most likely, you apply one of these four methods, each with some drawbacks:

- You just check your model visually while running it. First, you need very good and detailed animation. Then, you must trust it (i.e. it should be tested already). But even then, it is really hard to observe your new intricate feature directly. Plus, it is very time-consuming.

- You write a couple of “traceln” statements (if you live in Java) that print model information to a console while the model is running. However, this clutters your code and your console because you will always see these outputs, unless you disable the traceln statements and only enable them when you want to test. Very tiresome, and still messy code.

- You decide to analyse the output data and try to infer if your new feature actually worked. Now, this is a sensible way forward but what if your feature is actually not captured by output data? Or if data analysis would be very complicated?

- You are a crack and apply dedicated unit testing like JUnit for Java (which you can add to AnyLogic). Well, this is certainly the best way, I won’t have much to teach you here :-). However, this is quite complicated to implement and conceptually challenging. So if you look for a simpler way, keep reading.

Why you need better testing

Obviously, you want to know if your new feature actually works. So you test it. But there is more: your client/boss wants to be convinced of the model, so they want to see proof of the tests it has gone through. Sometimes, clients want to conduct their own testing. And what if you add more features to the model next week? Will your current feature still work as it should? Or did other things mess it up? What if the client provides a new input data set with unexpected information? Does it still work?

For these reasons, it is important to have a structured, repeatable approach to your model testing. Let’s see how to achieve that in AnyLogic (or any Java program). You can reproduce the approach in any object-oriented modelling language.

The magic ingredient: a dedicated function

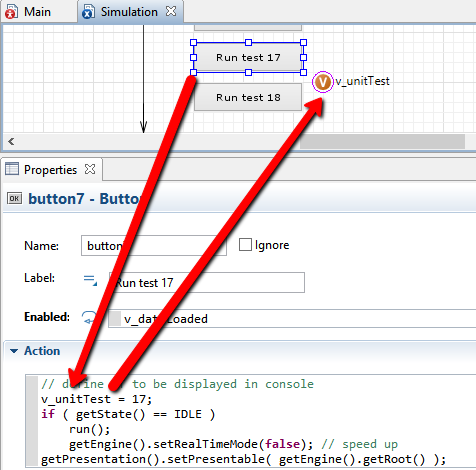

First, create an integer parameter “param_testID” on your Main. Make your experiments feed it an integer value that the user defines. This could happen using a button as below:

When the user clicks this button, the model starts and the “param_testID” parameter on your Main takes the given test value (17 here).

Create a new function on your Main called “test”. Give it four input arguments:

- testID (int): defines for which test ID to display text

- pause (Boolean): pauses the model if true

- eventLocation (String): where do you call this function from?

- eventMsg (String): your actual test message (what is happening)

Code it as below:
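Here is a minimal sketch of how such a function could look. It is written as plain Java so that it runs outside AnyLogic, which means a few names are my assumptions: the class “MainSketch” stands in for your Main, “console” stands in for AnyLogic's traceln, “pauseAction” stands in for whatever call you use to pause the model, and the message layout is just one possible choice.

```java
import java.util.function.Consumer;

// Hypothetical stand-in for the Main agent (assumption, not AnyLogic code).
class MainSketch {
    final int param_testID;          // test ID chosen by the user for this run
    final Consumer<String> console;  // stand-in for traceln (assumption)
    final Runnable pauseAction;      // stand-in for pausing the model (assumption)

    MainSketch(int param_testID, Consumer<String> console, Runnable pauseAction) {
        this.param_testID = param_testID;
        this.console = console;
        this.pauseAction = pauseAction;
    }

    // Prints the message only if testID matches the test selected for this run.
    void test(int testID, boolean pause, String eventLocation, String eventMsg) {
        if (testID != param_testID) {
            return; // message belongs to a different test: stay silent
        }
        console.accept("Test " + testID + " @ " + eventLocation + ": " + eventMsg);
        if (pause) {
            pauseAction.run(); // let the user inspect the model at this point
        }
    }

    public static void main(String[] args) {
        MainSketch main = new MainSketch(
                17, System.out::println, () -> System.out.println("(model paused)"));
        main.test(17, false, "conveyor.onEnter", "pallet accepted"); // shown
        main.test(4, true, "truck.onArrival", "dock assigned");      // ignored
    }
}
```

Inside AnyLogic you would not need the constructor or the two stand-ins: the function body would read the parameter directly and print with traceln.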

Now you can use this function anywhere in your model to print specific test messages only for specific tests. They will only appear in the console when you want them to, and you do not need to worry about turning them on/off. Your code will look more organized as any testing code will start with calling this new function. Plus, even clients could conduct testing now if you tell them to “click the start-test-17 button and check the console messages”.

THIS IS JUST ONE OF MANY WAYS OF CREATING YOUR PERSONALIZED “UNIT TESTING”. YOU CAN EXTEND THIS FUNCTION OR CREATE YOUR OWN. YOU COULD EXPORT THE MESSAGES TO EXTERNAL FILES OR ADD MORE COMPLICATED FILTERS. I’D BE INTERESTED TO HEAR HOW YOU ORGANIZE YOUR OWN TESTING. HAVE YOU CREATED SIMILAR “HOME-GROWN” METHODS?
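To make the file-export and filter idea a bit more concrete, here is one possible sketch in plain Java. Everything in it is hypothetical: the class name “FileTestLog”, the semicolon-separated line layout, and the choice to filter on a set of active test IDs rather than a single one.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;
import java.util.Set;

// Hypothetical variant: several tests can be active at once, and messages
// are appended to a text file instead of being printed to the console.
class FileTestLog {
    private final Set<Integer> activeTestIDs; // filter: which tests to record
    private final Path logFile;               // where the messages are appended

    FileTestLog(Set<Integer> activeTestIDs, Path logFile) {
        this.activeTestIDs = activeTestIDs;
        this.logFile = logFile;
    }

    // Appends one line per message; returns true if the message was written.
    boolean test(int testID, String eventLocation, String eventMsg) {
        if (!activeTestIDs.contains(testID)) {
            return false; // test not selected: nothing is recorded
        }
        String line = testID + ";" + eventLocation + ";" + eventMsg;
        try {
            Files.write(logFile, List.of(line),
                    StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return true;
    }
}
```

A file like this can be handed to a client as a record of what a test run produced, or opened in a spreadsheet for filtering by test ID.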